The Truth Behind AWS CEO Matt Garman’s Bold Bet on AI and Its Risks

The AI Gamble

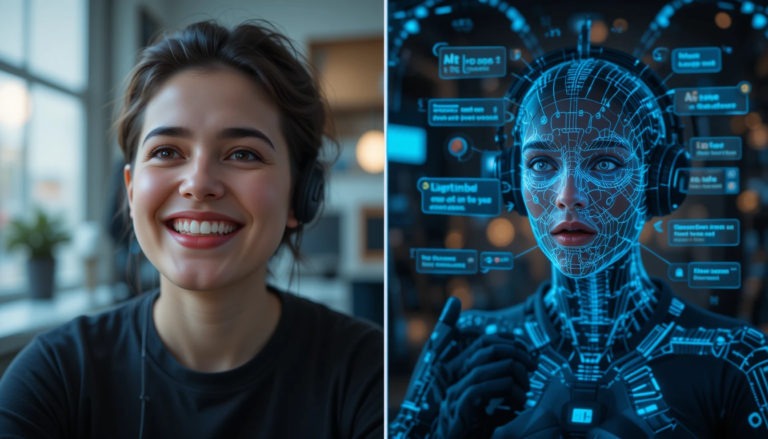

Artificial intelligence has become a driving force in technological innovation, with companies across various sectors investing heavily to harness its potential. Amazon Web Services (AWS), under the leadership of CEO Matt Garman, is no exception.

Matt Garman has been steering AWS toward substantial investments in AI infrastructure and services, aiming to position the company at the forefront of this transformative technology. However, such a bold strategy comes with its own set of challenges and risks.

AWS’s AI Investments: A Closer Look

Imagine you’re leading one of the world’s most successful cloud computing platforms, and everyone’s eyes are glued to the AI race. That’s the position AWS CEO Matt Garman is in, and he’s not holding back. AWS is pouring billions into AI, and it’s not just a random gamble—it’s a well-calculated bet on the future of computing.

Let’s talk numbers first. AWS plans to spend $11 billion in Georgia alone to expand its cloud and AI infrastructure. That’s a jaw-dropping figure! The money will expand its data centers, the physical backbone of its cloud services. Why Georgia? It’s a strategic location with access to a skilled workforce, reliable energy, and strong connectivity, all key elements for running a global cloud empire.

Now, let’s zoom in on its custom chips, the crown jewels of AWS’s AI push. You’ve probably heard of Trainium and Inferentia. These aren’t just buzzwords: they’re purpose-built accelerators designed to run large AI workloads more efficiently than general-purpose hardware. Trainium focuses on training machine learning models. Inferentia is optimized for inference, which means running an already-trained model to make predictions on new data.
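The difference between the two phases can be sketched in a few lines of Python. This is a toy linear model trained with plain gradient descent on an ordinary CPU. It uses no AWS hardware or Neuron SDK, and the names `train` and `infer` are illustrative, not part of any AWS API. Training loops over the data many times to adjust parameters. Inference applies the finished parameters once per request. That contrast helps explain why AWS builds a separate chip for each job.

```python
# Toy illustration of training vs. inference (plain CPU Python, no AWS APIs).

def train(xs, ys, lr=0.05, epochs=1000):
    """Training: repeatedly adjust parameters to reduce mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def infer(params, x):
    """Inference: apply already-trained parameters to new input, no updates."""
    w, b = params
    return w * x + b

# Training data generated from y = 3x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [3 * x + 1 for x in xs]

params = train(xs, ys)                # expensive, done once (or periodically)
print(round(infer(params, 10.0), 2))  # cheap, done per request → 31.0
```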

Here’s where it gets exciting. AWS says these chips can deliver up to 40% better price-performance than comparable GPU-based instances, which could be a game-changer for companies running AI on AWS. Imagine a startup developing cutting-edge AI for healthcare but constrained by budget. By training on Trainium and serving predictions on Inferentia, it could stretch the same budget noticeably further, opening doors to innovation that was previously out of reach.
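It helps to translate “40% better price-performance” into a bill. Here is a minimal sketch, assuming price-performance means work done per dollar. On that reading, 1.4 times the work per dollar means the same job costs 1/1.4 of the baseline, or roughly 29% less. The $100,000 baseline is a hypothetical figure. The 40% is the best case that “up to” allows.

```python
def relative_cost(price_performance_gain):
    """Cost of a fixed workload relative to a baseline.

    Assumes price-performance = work per dollar, so a gain of 0.40 means
    1.40x the work per dollar, and the same work costs 1 / 1.40.
    """
    return 1.0 / (1.0 + price_performance_gain)

baseline_bill = 100_000.0  # hypothetical GPU bill in dollars, for illustration only
gain = 0.40                # best case of the "up to 40%" figure

new_bill = baseline_bill * relative_cost(gain)
savings_pct = (1 - relative_cost(gain)) * 100
print(f"${new_bill:,.0f}, {savings_pct:.1f}% cheaper")  # → $71,429, 28.6% cheaper
```

Under this reading, even the best-case figure cuts a fixed workload’s cost by a bit under 30%. That is a substantial saving, but not an order-of-magnitude one.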

And AWS isn’t just focusing on hardware. Its SageMaker platform is another big deal. It’s like the Swiss Army knife of machine learning, letting users build, train, and deploy AI models in one place. SageMaker offers tools for everything from data labeling to advanced model optimization. According to AWS, companies using SageMaker have seen a 54% reduction in time-to-deployment for machine learning models. That’s significant in time-sensitive industries like finance and healthcare.

But here’s the kicker: AWS isn’t just creating tools; it’s building an ecosystem. Amazon has invested billions in AI research firms like Anthropic, which uses AWS as its primary cloud provider. Partnerships like this help keep AWS’s technology aligned with cutting-edge advances. These collaborations not only boost AWS’s credibility but also position it as a thought leader in the AI space.

The Rationale Behind the AI Push

Now, you might be wondering—why is AWS doubling down on AI so aggressively? The answer is simple yet profound: the future of cloud computing is AI-driven. Let me break it down for you.

First, let’s talk market dynamics. According to Gartner, the global AI market is projected to grow from $136 billion in 2022 to over $1.5 trillion by 2030. That’s more than a tenfold increase in just eight years! AWS knows that the companies leading in AI are likely to shape the next wave of technological innovation, and it’s determined to stay ahead.
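To put that forecast in annual terms, we can compute the compound annual growth rate (CAGR) it implies: (end / start)^(1 / years) − 1. The sketch below simply reruns that arithmetic on the two figures quoted above and adds no new forecast data. It works out to roughly an 11x multiple, or about 35% growth a year.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by growing start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1

start = 136e9   # 2022 market size in dollars, as quoted in the text
end = 1.5e12    # 2030 projection in dollars, as quoted in the text
years = 2030 - 2022

print(f"multiple: {end / start:.1f}x, implied CAGR: {cagr(start, end, years):.1%}")
```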

Think about it: everything is becoming AI-enabled. Whether it’s autonomous vehicles, personalized healthcare, or fraud detection in banking, AI is transforming industries at an unprecedented pace. AWS recognizes that its clients need robust, scalable, and cost-effective solutions to keep up. By offering custom chips and platforms like SageMaker, AWS aims to be the go-to cloud provider for AI workloads.

Here’s another angle: data gravity. Large datasets tend to pull applications and services toward wherever they are stored, because moving them is slow and expensive. More high-quality data also generally lets a company build more capable AI. AWS’s strategy appears to be locking in customers with end-to-end AI services that make switching to competitors like Microsoft Azure or Google Cloud too costly and complex. It’s a classic “moat” strategy, applied to the high-stakes world of AI.

And let’s not forget about edge computing. With the rise of IoT devices, there’s a growing need for real-time AI processing at the edge: think smart cameras in retail or sensors in autonomous vehicles. AWS’s investments in AI infrastructure position it to compete strongly in this emerging field, providing low-latency solutions that competitors might struggle to match.

But there’s also a cultural aspect to this. Under Matt Garman, AWS has embraced a culture of innovation and experimentation. By taking bold risks in AI, Garman is signaling to the tech world—and to AWS employees—that the company is willing to bet big on transformative technologies. It’s a morale booster, showing that AWS isn’t just following trends; it’s shaping them.

The Risks Involved

Now, let’s talk about the elephant in the room: the risks. No bold strategy comes without its share of challenges, and AWS’s AI push is no exception.

First off, the financial risk is enormous. AI infrastructure, especially custom chip development, is capital-intensive. According to some estimates, developing a single AI chip can cost upwards of $500 million. While AWS has deep pockets, these investments won’t yield immediate returns. There’s always the risk that the market won’t adopt their custom solutions as quickly as anticipated, leading to underutilized resources and sunk costs.
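One way to see why slow adoption hurts is to spread a fixed development cost over the number of chips actually deployed. The sketch below uses the $500 million estimate quoted above. The deployment volumes are hypothetical, not AWS figures. The sketch also ignores manufacturing, power, and operating costs entirely.

```python
DEVELOPMENT_COST = 500e6  # the "upwards of $500 million" estimate quoted in the text

def amortized_dev_cost_per_chip(chips_deployed):
    """Share of the one-time development cost carried by each deployed chip."""
    return DEVELOPMENT_COST / chips_deployed

# Hypothetical adoption scenarios: chip counts are illustrative, not AWS data
for chips in (50_000, 250_000, 1_000_000):
    print(f"{chips:>9,} chips -> ${amortized_dev_cost_per_chip(chips):,.0f} per chip")
```

The fixed cost does not shrink if adoption lags. It simply lands on fewer chips, which is exactly the underutilization risk described above.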

Then there’s the issue of competition. AWS isn’t the only player in the game, far from it. Microsoft Azure has its own AI supercomputing infrastructure, and Google Cloud boasts advanced AI capabilities powered by its Tensor Processing Units (TPUs). AWS leads in overall cloud market share, but its competitors are just as hungry for dominance in AI. In this high-stakes race, even a slight misstep could mean losing ground.

Let’s not forget about regulatory risks. With the growing scrutiny around data privacy and the ethical use of AI, AWS could find itself in the crosshairs of regulators. Any allegations of data misuse or bias in AI models could damage its reputation and lead to costly legal battles.

Another critical risk is talent. AI development requires top-tier talent, and the competition for skilled engineers and data scientists is fierce. AWS must not only attract but also retain these experts in a highly competitive job market. Losing key personnel could delay projects and give competitors an edge.

Lastly, there’s the risk of over-reliance on AI hype. While AI has immense potential, it’s not a magic bullet. Companies might overestimate what AI can achieve in the short term, leading to unmet expectations. AWS must strike a delicate balance between promoting its AI capabilities and managing customer expectations to avoid a backlash if results fall short.

Conclusion: A Calculated Risk

In summary, AWS CEO Matt Garman’s bold bet on AI is a fascinating blend of vision, strategy, and risk. The investments in custom chips, data centers, and partnerships showcase a company determined to lead the AI revolution. But this path is fraught with challenges, from fierce competition to regulatory scrutiny.

Whether AWS’s gamble pays off will depend on its ability to innovate, execute, and navigate these risks. One thing’s for sure: the stakes couldn’t be higher, and the tech world will be watching closely.