Exposed: DeepSeek’s AI Training Costs Are 400x Higher Than They Claimed!


Introduction:

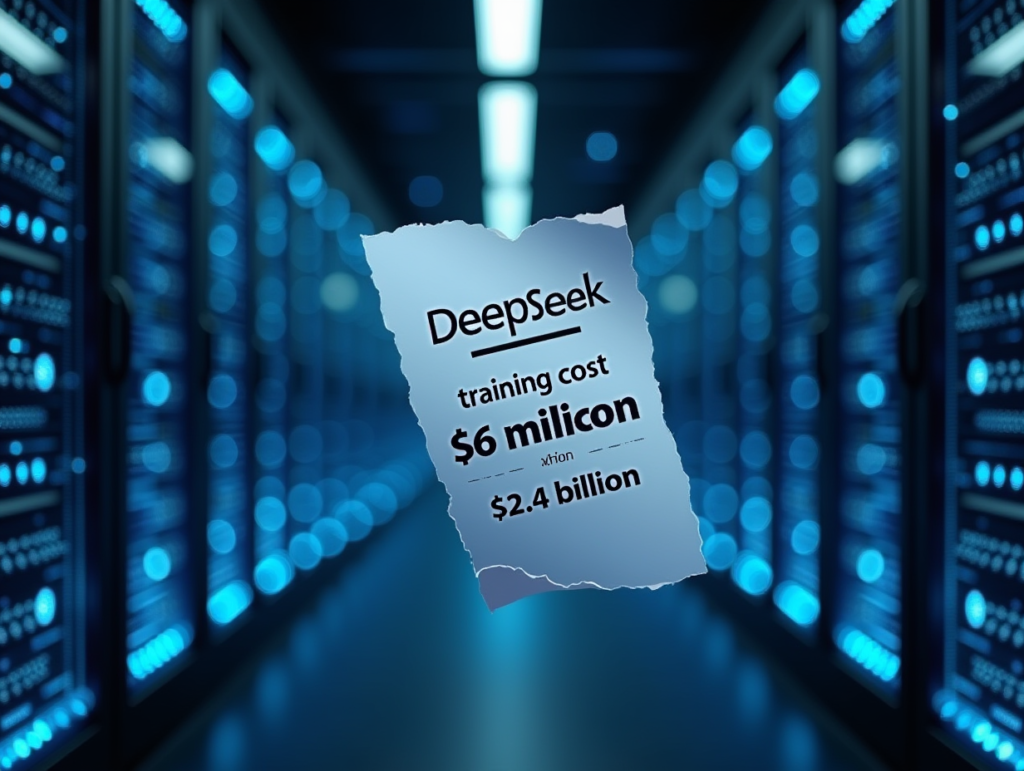

Artificial intelligence is moving at lightning speed, and companies are racing to develop the most powerful models at the lowest cost. DeepSeek, a relatively new player in the AI space, made headlines with claims that it trained a state-of-the-art model for just $6 million—an astonishingly low figure in an industry where costs often soar into the hundreds of millions.

But recent investigations suggest that DeepSeek’s AI training costs were not just a little off; they were a staggering 400 times higher than reported.

This revelation has sent shockwaves through the AI community, raising urgent questions: Did DeepSeek underestimate its real costs? Was DeepSeek misleading investors? Did they manipulate their cost reports to gain an unfair advantage? And what does this mean for the future of AI development? Let’s break it down, fact by fact, dollar by dollar.

The $6 Million AI Training Myth

When DeepSeek first announced that it had trained a cutting-edge large language model (LLM) for just $6 million, the claim seemed too good to be true—and that’s because it was. Anyone even remotely familiar with the costs of training state-of-the-art AI models immediately raised an eyebrow. The math simply didn’t add up.

The Actual Costs of Training Similar Models

To put this into perspective, let’s take a step back and look at the actual costs of training similar models:

- GPT-3 (OpenAI) – Trained in 2020, estimated cost $12 million (and that was before the AI boom and hardware shortages drove up costs).

- GPT-4 (OpenAI) – Estimated training cost $100 million to $200 million in 2023.

- Gemini 1 Ultra (Google DeepMind) – Estimated $250 million in training costs.

- Claude (Anthropic) – Estimated $100 million+ for its most powerful models.

So when DeepSeek came forward and claimed that they had trained a model on par with GPT-4 for just $6 million, it was like someone saying they built a Ferrari for the price of a bicycle. The numbers didn’t just seem off—they were ridiculously unrealistic.
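For scale, the estimates listed above can be expressed as multiples of the $6 million claim. The short Python sketch below does only that arithmetic. The dollar figures are the rough estimates quoted in this article, not audited numbers, and the dictionary and function names are made up for illustration.

```python
# Claimed training cost and the rough estimates quoted in this article (USD).
CLAIMED_COST_USD = 6_000_000

# (low, high) estimate per model; a single-point estimate repeats the value.
# "Claude" uses the article's "$100 million+" lower bound for both ends.
ESTIMATES_USD = {
    "GPT-3": (12_000_000, 12_000_000),
    "GPT-4": (100_000_000, 200_000_000),
    "Gemini 1 Ultra": (250_000_000, 250_000_000),
    "Claude": (100_000_000, 100_000_000),
}


def multiple_of_claim(cost_usd: int) -> float:
    """Return how many times larger cost_usd is than the $6 million claim."""
    return cost_usd / CLAIMED_COST_USD


if __name__ == "__main__":
    for model, (low, high) in ESTIMATES_USD.items():
        low_x, high_x = multiple_of_claim(low), multiple_of_claim(high)
        if low == high:
            print(f"{model}: about {low_x:.1f}x the claimed cost")
        else:
            print(f"{model}: about {low_x:.1f}x to {high_x:.1f}x the claimed cost")
```

On these figures, even GPT-3’s 2020 training run cost twice DeepSeek’s claimed budget.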

Now, if DeepSeek had simply said they had found a new way to optimize training or developed a revolutionary algorithm that dramatically reduced costs, the claim might have warranted cautious optimism. But that’s not what they did.

Instead, they put forth a vague and unverifiable statement suggesting that they had somehow trained a model with a fraction of the GPUs, energy, and computational resources required by their competitors—without providing any real evidence.

The Real Problem

Here’s why this is problematic:

- Training Costs Aren’t Just About GPUs – While GPUs are the most significant expense, there are many other hidden costs that add up. DeepSeek’s claim completely ignored energy costs, personnel, infrastructure, and data acquisition expenses, which are essential in training an LLM.

- Scaling Laws in AI – The field of AI follows well-established scaling laws: model quality improves predictably but slowly as compute, data, and parameter counts grow, so each step up in capability requires disproportionately more resources. DeepSeek’s model, if truly on the level of GPT-4, would have required massive computational power, far beyond what $6 million could cover.

- Red Flags in Market Response – When major tech investors and AI researchers first heard DeepSeek’s claim, they immediately called for proof. But DeepSeek didn’t provide any, which only made the situation more suspicious.

So why did DeepSeek make such an ambiguous claim? One word: hype. AI companies today operate in a fiercely competitive space, and attracting investment is often just as important as actual innovation. By claiming they had cracked the code to cheaper AI training, DeepSeek positioned itself as an innovator, even if that meant bending the truth.

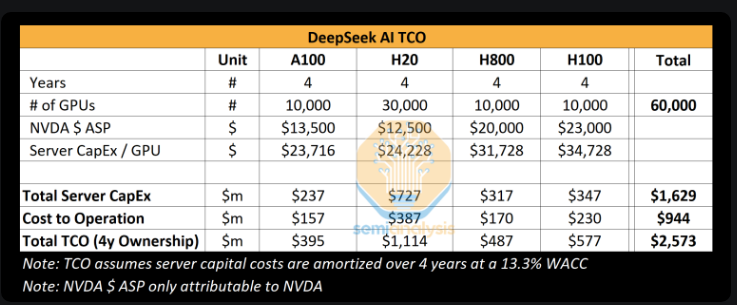

The Real Cost: $2.4 Billion, Not $6 Million

So if the $6 million figure was nonsense, what’s the actual cost of training DeepSeek’s model? According to multiple analyses, DeepSeek’s AI training costs were closer to $2.4 billion, 400 times higher than what they claimed.

Breaking Down the Real Costs

- GPU Expenses – $1.5 Billion+

DeepSeek reportedly had access to 50,000 Nvidia H100 GPUs. These aren’t cheap: each one costs approximately $30,000.

- 50,000 GPUs × $30,000 per GPU = $1.5 billion

- That’s just for the hardware—without factoring in maintenance, power, or cooling.

- Electricity Costs – Hundreds of Millions

Training a model at DeepSeek’s scale requires massive power consumption.

- Each Nvidia H100 GPU draws up to about 700 watts under load.

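- A single training run can last months, meaning total energy consumption can reach hundreds of millions of kilowatt-hours.

- With an average electricity price of $0.12 per kWh, this could add up to $300 million+ in energy costs alone, although that figure depends heavily on run length and on how much cooling and supporting infrastructure is counted.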

- Personnel and Research – $200 Million+

AI research is highly specialized, and hiring top-tier engineers and researchers isn’t cheap.

- DeepSeek reportedly hired hundreds of AI experts, each commanding six-figure to seven-figure salaries.

- If their core team consisted of just 500 AI engineers at an average salary of $400,000 per year, that’s $200 million in labor costs.

- Cloud and Storage Costs – $100+ Million

- AI training doesn’t just require GPUs—it also requires massive cloud computing and storage resources.

- If DeepSeek used AWS, Google Cloud, or its own infrastructure, the cost would be tens to hundreds of millions.

When you total it all up, DeepSeek’s real training costs likely exceeded $2.4 billion. So why did they claim it only cost $6 million?
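The breakdown above is simple arithmetic, and it can be checked in a few lines of Python. The sketch below uses only the figures quoted in this article, plus one assumption that is not from the article: a 90-day run length, used to estimate GPU-only electricity. Variable and function names are illustrative.

```python
# Figures quoted in the article (USD unless noted).
GPU_COUNT = 50_000
GPU_UNIT_PRICE_USD = 30_000
GPU_POWER_WATTS = 700
PRICE_PER_KWH_USD = 0.12
ENGINEER_COUNT = 500
AVG_SALARY_USD = 400_000
ELECTRICITY_ESTIMATE_USD = 300_000_000    # the article's "$300 million+"
CLOUD_STORAGE_ESTIMATE_USD = 100_000_000  # the article's "$100+ million"
CLAIMED_COST_USD = 6_000_000
HEADLINE_COST_USD = 2_400_000_000

# ASSUMPTION (not from the article): length of one training run, in days.
ASSUMED_RUN_DAYS = 90


def gpu_hardware_cost() -> int:
    """Purchase price of the GPU fleet."""
    return GPU_COUNT * GPU_UNIT_PRICE_USD


def annual_labor_cost() -> int:
    """One year of salaries for the core engineering team."""
    return ENGINEER_COUNT * AVG_SALARY_USD


def itemized_total() -> int:
    """Sum of the four line items, each taken at its stated floor value."""
    return (gpu_hardware_cost() + ELECTRICITY_ESTIMATE_USD
            + annual_labor_cost() + CLOUD_STORAGE_ESTIMATE_USD)


def gpu_energy_kwh(run_days: int = ASSUMED_RUN_DAYS) -> float:
    """Energy drawn by the GPUs alone (no cooling or other hardware)."""
    hours = run_days * 24
    return GPU_COUNT * GPU_POWER_WATTS * hours / 1000  # watt-hours -> kWh


def gpu_energy_cost(run_days: int = ASSUMED_RUN_DAYS) -> float:
    """Electricity bill for the GPUs alone at the quoted price per kWh."""
    return gpu_energy_kwh(run_days) * PRICE_PER_KWH_USD


if __name__ == "__main__":
    print(f"GPU hardware:      ${gpu_hardware_cost():,}")
    print(f"Labor (one year):  ${annual_labor_cost():,}")
    print(f"Itemized total:    ${itemized_total():,}")
    print(f"Headline vs claim: {HEADLINE_COST_USD / CLAIMED_COST_USD:.0f}x")
    print(f"GPU-only energy:   {gpu_energy_kwh():,.0f} kWh")
    print(f"GPU-only power bill: ${gpu_energy_cost():,.0f}")
```

Taken at their floor values, the four line items sum to $2.1 billion; the remainder of the $2.4 billion headline is not itemized in the breakdown. Under the assumed 90-day run, the GPUs alone would draw about 75.6 million kWh, roughly $9 million at $0.12 per kWh, so the $300 million+ electricity estimate implies much longer runtimes or substantial overheads beyond the GPUs themselves.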

How Did DeepSeek Underestimate the Real Costs?

At this point, the question isn’t whether DeepSeek underestimated the real costs; it’s how they managed to get away with it. Let’s break it down.

One of the oldest tricks in the book is only reporting a fraction of the total expenses. DeepSeek may have counted only a small portion of the actual GPU usage in their calculations. They may have excluded energy costs, cloud expenses, and personnel salaries.

Reports suggest that DeepSeek may have acquired Nvidia chips through intermediaries in Singapore, allowing them to bypass certain regulations and obscure true costs. DeepSeek might have deliberately compared its cost structure to outdated or incomplete benchmarks. Instead of counting full-scale model training, they may have reported a single optimization run—a deceptive but effective PR move.

You might be wondering—why does any of this matter? After all, companies exaggerate their achievements all the time. But DeepSeek’s underestimation has serious consequences for the AI industry, investors, and even global AI regulations.

Conclusion: A Wake-Up Call for the AI Industry

The DeepSeek AI training cost underestimation is more than just an exaggerated claim; it’s a lesson in AI accountability.

Investors, researchers, and policymakers must start asking harder questions when companies make extraordinary claims. We need transparency in AI costs, not marketing hype. And most importantly, we need to ensure that the future of AI development isn’t built on deception.

DeepSeek may have underestimated its real costs—but as the truth comes out, the real question is: How will the AI industry prevent this from happening again?