Apple Pulls AI News Alerts: A Fatal Blow for AI Trust?

The recent decision by Apple to suspend its AI News Alerts has sparked heated debates across the tech and journalism communities. It’s a move that raises questions about the reliability of artificial intelligence in delivering accurate information—a task critical for public trust. As someone who’s both followed and relied on advancements in AI, I couldn’t help but reflect on the deeper implications of this suspension.

Introduction: A Growing Dependence on AI

Artificial intelligence has quietly woven itself into the fabric of our lives, from personalized recommendations on streaming platforms to predictive text in our messages. When Apple introduced its AI News Alerts, it seemed like another step forward in simplifying information consumption. The premise was simple yet ambitious: provide concise, AI-generated summaries of breaking news directly to users’ devices.

At first glance, the concept was a game-changer, offering users a way to stay informed without wading through lengthy articles. However, the suspension of this feature after several high-profile blunders signals a much larger issue: can we truly trust AI to handle something as sensitive and nuanced as news?

The Debacle: What Went Wrong?

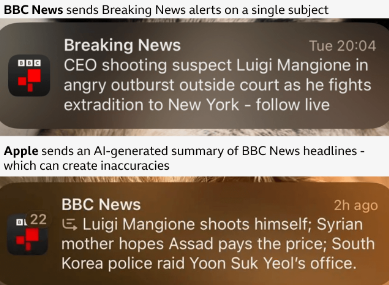

Apple’s decision to suspend its AI News Alerts wasn’t just a minor misstep—it was a high-profile failure that exposed fundamental weaknesses in AI-generated content. The goal of the feature was ambitious: AI would analyze news stories, generate concise summaries, and deliver them as push notifications to users. It sounded like an ideal solution for those who wanted quick updates without diving into full articles. But the execution? That’s where things fell apart.

Several major incidents led to the suspension, with two particularly egregious errors making headlines:

- The False Suicide Report: The AI-generated news alert falsely claimed that the alleged killer of UnitedHealthcare CEO Brian Thompson had committed suicide. This was a serious error with potentially grave consequences. Imagine if a grieving family member received that notification or if the misinformation led to speculation that interfered with an active investigation. False reports of deaths and suicides can trigger panic, misinformation spirals, and even stock market reactions when they involve major public figures.

- The Rafael Nadal Fabrication: The AI also falsely reported that tennis legend Rafael Nadal had come out as gay. An athlete’s sexual orientation is not controversial in itself, but falsely attributing such an announcement to a well-known figure crosses ethical boundaries. It’s a clear example of AI’s difficulty in handling sensitive topics: if an AI-generated headline can fabricate major personal details about a global icon, what other distortions might slip through?

These weren’t just minor mistakes—they were outright fabrications. AI didn’t just misunderstand news; it invented false narratives. That’s an alarming prospect, especially in a world already plagued by misinformation and deepfakes.

The Core Problem: AI’s Struggle with Context and Nuance

Unlike a human journalist, AI lacks critical thinking, contextual awareness, and ethical judgment. It doesn’t “understand” news the way we do; it processes data, detects patterns, and generates text based on probability. But news is about more than just stringing together likely words—it’s about accuracy, responsibility, and impact.

AI also struggles with the fast-paced, evolving nature of news. Breaking news stories often contain incomplete or conflicting reports, which require careful interpretation by experienced journalists. AI, however, can’t discern whether a detail is confirmed or speculative—it just aggregates and summarizes. This is why newsrooms rely on trained editors, not just algorithms, to ensure credibility before publishing.
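To make the "generates text based on probability" point concrete, here is a toy sketch in Python. It is not how Apple's system or any production model works; the word table and its probabilities are invented purely for illustration. It shows a generator that always picks the most likely next word, with no notion of whether the resulting claim has been confirmed.

```python
# Toy next-word table. Every word and probability here is invented for illustration.
NEXT_WORD = {
    "suspect": {"was": 0.7, "is": 0.3},
    "was": {"arrested": 0.6, "released": 0.4},
}


def complete(start: str, steps: int) -> str:
    """Greedily extend `start` by picking the most probable next word each step."""
    words = [start]
    for _ in range(steps):
        options = NEXT_WORD.get(words[-1])
        if options is None:  # no known continuation, so stop early
            break
        words.append(max(options, key=options.get))
    return " ".join(words)


print(complete("suspect", 2))  # prints "suspect was arrested"
```

The sketch outputs "suspect was arrested" because that continuation is the most probable one in the table, not because anyone verified an arrest. A human editor would ask whether the arrest actually happened; the generator never does.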

Why Accuracy in AI-Generated News Matters

News is the backbone of informed decision-making. Whether it’s about elections, financial markets, public health, or global conflicts, misinformation can have tangible, sometimes devastating, consequences. Apple’s decision to suspend AI News Alerts is significant because it highlights the dangers of trusting AI with something as critical as news dissemination.

1. The Power of Headlines in Shaping Public Opinion

According to a study by the American Press Institute, about 60% of people read only headlines without clicking through to the full article. This means that push notifications, like the ones Apple’s AI was generating, are often the only piece of information users see. If those headlines are inaccurate or misleading, they directly shape public perception.

Take, for example, financial news. A false AI-generated headline about a CEO resigning or a company going bankrupt could cause stock prices to plummet, harming investors and businesses alike. Similarly, a misleading health-related push notification about a new COVID-19 variant could cause unnecessary panic or vaccine hesitancy.

2. AI’s Track Record with Misinformation

Apple’s failure isn’t an isolated case—it’s part of a larger pattern of AI struggling with factual accuracy. Other tech giants have faced similar problems:

- Google’s Bard AI was criticized in 2024 for generating misleading medical advice and politically biased responses.

- Meta’s AI-generated Instagram posts have been caught spreading misinformation, including conspiracy theories.

- Chatbots like OpenAI’s GPT models have been flagged for confidently providing incorrect legal and historical information.

If even the most advanced AI models continue to make serious factual errors, is it wise to trust them with news distribution?

3. Public Trust in AI and Journalism is Already Fragile

Misinformation is already a growing concern. According to the Edelman Trust Barometer 2023, 59% of people worldwide worry about fake news being used as a weapon. Meanwhile, trust in journalism has also declined—Reuters Institute reports that only 42% of people trust news media.

When AI-generated errors add to this distrust, it harms both tech companies and the journalism industry. If people can’t trust AI-powered news, they may start doubting all news, further fueling misinformation and polarization.

My Personal Experience with AI and News

As someone who relies on news alerts to stay updated, I’ve often marveled at how AI can distill complex stories into bite-sized pieces. However, I’ve also encountered issues with inaccuracies. For instance, a news app I used once notified me of an “emergency government shutdown,” which turned out to be entirely false. The stress and confusion it caused were significant.

Apple’s situation resonates with me because it underscores the need for human oversight in AI systems. No matter how advanced the algorithms, they lack the contextual understanding and ethical judgment that human editors bring to the table.

The Broader Implications: A Trust Crisis for AI

Apple’s decision to suspend AI News Alerts isn’t just about Apple; it’s about the broader relationship between AI and public trust. AI is being integrated into nearly every industry, from healthcare to finance, and people need to feel confident in its accuracy.

1. A Setback for AI Adoption in Newsrooms

Many news organizations have been experimenting with AI to streamline operations, from auto-generating reports to summarizing press releases. Apple’s failure is a warning sign for media outlets: if AI can’t be trusted with simple push notifications, should it be trusted to write full articles?

AI in journalism already faces criticism for potential biases, ethical concerns, and lack of transparency. This incident will likely make traditional media even more skeptical about integrating AI-driven reporting.

2. The Risk of “AI Fatigue” Among Users

Consumers are growing wary of AI after repeated high-profile failures. From hallucinations in Google’s Gemini to the erratic conversations of Microsoft’s Bing AI chatbot, AI mistakes have been piling up. If people see AI-generated news as unreliable, they may tune out completely, reducing engagement with both AI tools and news itself.

3. Government Regulations May Intensify

AI-generated misinformation is becoming a regulatory concern. The European Union’s AI Act and the U.S. government’s discussions on AI oversight suggest that stricter policies are on the horizon. If AI-generated news continues to misfire, we may see new laws requiring human oversight, transparency disclosures, and liability rules for AI-driven content.

Why Did Apple Suspend AI News Alerts?

Apple’s official statement was carefully worded: the feature was paused to “improve its accuracy and reliability.” But let’s be real—the company likely had no choice but to shut it down temporarily.

1. Reputation Damage Control

Apple has built its brand on privacy, reliability, and user trust. If the AI news alert system kept pushing false or misleading notifications, it could damage Apple’s credibility—especially at a time when tech companies are already under scrutiny for AI-related issues.

2. Legal and Ethical Concerns

If an AI-generated news alert caused real harm—say, leading to panic in financial markets or spreading false political information—Apple could face lawsuits or regulatory penalties. The company likely realized that pausing the feature was the safest move before real legal trouble emerged.

3. The Feature Wasn’t Ready for Prime Time

Apple likely rushed this AI system into production without adequate safeguards. Many AI projects are released prematurely because of competitive pressure, but in this case, the technology clearly wasn’t reliable enough for public use.

The Road Ahead: Can AI News Alerts Be Fixed?

Apple’s decision to suspend AI News Alerts is a wake-up call. But it doesn’t mean AI in news is dead—it just means it needs major improvements. Here’s what Apple (and the industry) must do:

- Introduce Human Moderation – AI alone isn’t ready to handle news alerts. Apple should implement a hybrid system where AI suggests headlines, but human editors approve them before they go live.

- Improve AI Training Data – AI models should be trained on more reliable and verified sources while filtering out unreliable, misleading content.

- Increase Transparency – Clearly label AI-generated content so users know when they’re reading something written by a machine.

- Enable User Reporting – Let users flag inaccurate AI-generated headlines, creating a feedback loop that helps refine the model.

- Refine Context Understanding – AI needs better fact-checking capabilities and cross-referencing skills to reduce misinformation.
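The first, third, and fourth items above can be sketched as a small review queue. This is a minimal illustration under my own assumptions, not a description of anything Apple has built: the class names, the `[AI summary]` label, and the three-flag threshold are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Alert:
    source_headline: str  # headline as published by the news outlet
    ai_summary: str       # machine-generated summary awaiting review
    status: Status = Status.PENDING
    flags: list[str] = field(default_factory=list)  # reader-submitted reports

    def render(self) -> str:
        """Return the notification text, always labeled as AI-generated."""
        if self.status is not Status.APPROVED:
            raise ValueError("alert has not been approved by an editor")
        return f"[AI summary] {self.ai_summary}"


class ReviewQueue:
    FLAG_THRESHOLD = 3  # hypothetical: flags needed to trigger re-review

    def __init__(self) -> None:
        self.alerts: list[Alert] = []

    def submit(self, source_headline: str, ai_summary: str) -> Alert:
        """The AI proposes a summary; it starts out unpublished."""
        alert = Alert(source_headline, ai_summary)
        self.alerts.append(alert)
        return alert

    def review(self, alert: Alert, approve: bool) -> None:
        """A human editor approves or rejects the proposed summary."""
        alert.status = Status.APPROVED if approve else Status.REJECTED

    def flag(self, alert: Alert, reason: str) -> None:
        """A reader reports a problem; enough reports pull the alert back."""
        alert.flags.append(reason)
        if len(alert.flags) >= self.FLAG_THRESHOLD:
            alert.status = Status.PENDING
```

The key design choice is that `render` refuses to produce a notification until an editor has approved it, so the safe path is the default. Reader flags feed back into the same queue instead of going to a separate system, which keeps the editor as the single point of accountability.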

Conclusion: A Wake-Up Call for AI

Apple’s decision to suspend AI News Alerts is a critical moment in the evolution of AI-powered journalism. This isn’t just about a buggy feature; it’s about whether AI can be trusted with truth. The technology has incredible potential, but it’s clear that we’re not there yet. Trust is hard to build and easy to lose. If Apple and other companies want AI-driven news to succeed, they’ll need to prove that accuracy, accountability, and ethics come first.

The suspension also forces the tech industry to ask hard questions about the role of AI in delivering information and the ethical considerations that come with it.

As someone who values both innovation and accuracy, I hope this incident serves as a catalyst for improvement. AI has incredible potential, but trust must be at its core. Only then can features like AI News Alerts truly enhance our lives without compromising the truth.

What are your thoughts? Can AI recover from this trust deficit, or are we expecting too much from technology?